Contextual iOS Software

Building Better Products With iOS’s Sensors

I group the iPhone’s sensors into three categories: Internal, External, and Compound. An Internal sensor runs natively on the device. Although Internal sensors may rely on external hardware (GPS satellites, Bluetooth beacons), the hardware that captures and processes the data is all on the device. External sensors, by contrast, rely entirely on outside systems that capture and process data and then send the results to the mobile device through a third-party API integration (weather, sports scores, restaurant ratings, commute times, theater listings). A Compound sensor is composed of at least two Internal and/or External sensors.
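To make the taxonomy concrete, here is a minimal Swift sketch that models it as a recursive type. The `Sensor` enum and its case names are hypothetical (Swift reserves `internal` as a keyword, so the cases are called `onDevice` and `thirdParty`); the only rule it encodes from the text is that a Compound sensor combines at least two other sensors.

```swift
// Hypothetical model of the Internal / External / Compound taxonomy.
enum Sensor {
    case onDevice(String)    // Internal: captured and processed on the device (e.g. GPS)
    case thirdParty(String)  // External: results delivered by a third-party API (e.g. weather)
    case compound([Sensor])  // Compound: built from other Internal and/or External sensors

    /// True when every compound in the tree combines at least two sensors.
    var isValid: Bool {
        switch self {
        case .onDevice, .thirdParty:
            return true
        case .compound(let parts):
            return parts.count >= 2 && parts.allSatisfy { $0.isValid }
        }
    }

    /// The names of the underlying Internal and External sensors.
    var leafNames: [String] {
        switch self {
        case .onDevice(let name), .thirdParty(let name):
            return [name]
        case .compound(let parts):
            return parts.flatMap { $0.leafNames }
        }
    }
}

// Example: a compound of one Internal and one External sensor.
let weatherAware = Sensor.compound([.onDevice("Location"), .thirdParty("Weather")])
print(weatherAware.isValid)    // true
print(weatherAware.leafNames)  // ["Location", "Weather"]
```

Because `compound` holds other `Sensor` values, a compound can itself contain compounds, which matches the idea of layering sensors into richer context.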

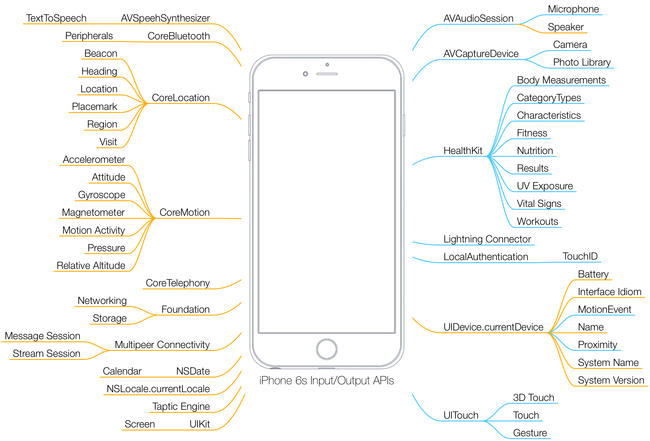

The goal of classifying these sensors is to help product developers focus on creating the next generation of contextual products. Understanding the sensor landscape on a mobile device can accelerate the design process for amazing and unique products. The diagram above details the iPhone’s sensors: the orange lines are output sensors and the blue lines are input sensors.

Let’s Build Some Products

With this list of sensors in hand, let’s describe a few existing products and companies as compound sensors.

- Uber / Lyft / HotelTonight = Screen + Location + Calendar

- Shyp = Screen + Location + Camera

- Highlight = Screen + Location + Social Network

- Snapchat = Screen + Camera + Calendar + Microphone

- Running by Gyroscope = Screen + Location + Calendar + Workouts + Camera

- Foursquare = Location + Foursquare API

- iOS 9.3 Night Shift = Calendar + Location
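Read this way, each product is a set of sensor inputs, and asking whether a product can be built from the sensors at hand is a subset test. A small Swift sketch of that idea; `SensorInput`, `products`, and `buildableProducts(with:)` are hypothetical names, and the recipes are copied from the list above.

```swift
// Hypothetical sensor inputs named after the product recipes above.
enum SensorInput {
    case screen, location, calendar, camera, socialNetwork, microphone, workouts, foursquareAPI
}

// Each product expressed as the set of sensors it combines.
let products: [String: Set<SensorInput>] = [
    "Uber / Lyft / HotelTonight": [.screen, .location, .calendar],
    "Shyp": [.screen, .location, .camera],
    "Highlight": [.screen, .location, .socialNetwork],
    "Snapchat": [.screen, .camera, .calendar, .microphone],
    "Running by Gyroscope": [.screen, .location, .calendar, .workouts, .camera],
    "Foursquare": [.location, .foursquareAPI],
    "iOS 9.3 Night Shift": [.calendar, .location],
]

/// Products whose every sensor is contained in `available`, sorted by name.
func buildableProducts(with available: Set<SensorInput>) -> [String] {
    products.filter { $0.value.isSubset(of: available) }.map { $0.key }.sorted()
}

print(buildableProducts(with: [.screen, .location, .calendar]))
// ["Uber / Lyft / HotelTonight", "iOS 9.3 Night Shift"]
```

Modeling recipes as sets also makes the reverse question cheap: add one new input to `available` and see which additional products become possible.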

Compound Sensors

- Focal Point - (Compass + Camera + Mesh Network) Share the compass heading of every active camera in a crowd over a mesh network to work out the focal point of what the crowd is watching. Then create a feed that broadcasts only the pictures and video closest to that focal point.

- Audio Enhanced Proximity - (Location + Bluetooth + Microphone) Use GPS to determine general location, Bluetooth to determine indoor location, and audio to determine whether devices are within conversational range. Together, these create an experience that detects true indoor proximity between devices.

- Combining Memories - (Photo + Location + Calendar + Mesh Network) Use the location and time information attached to photos to determine whether two people who are near each other attended any of the same events. If they did, create a shared experience between them.

- Mobile Powered Noise Canceling Earphones - (Microphone + Speaker) I’ve detailed my attempt to make this work, but I was unsuccessful.

- Running Pronation Calculator - (Bluetooth + Apple Watch Accelerometer + Calendar) Attach an Apple Watch Sport to a shoe and use the iPhone to trigger a 10-second accelerometer recording. After recording the foot’s movement, normalize the acceleration data and use the acceleration curves to calculate pronation and foot-strike type.
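The Focal Point idea hinges on one geometric step: given where several cameras are and which way each is pointing, find the point their lines of sight converge on. A minimal Swift sketch, assuming positions have already been projected into a local east/north plane in metres and headings are compass degrees clockwise from north (on iOS, Core Location’s `CLHeading` is one possible source). It swaps in a standard least-squares line intersection; `CameraObservation`, `estimateFocalPoint`, and `offsetFromFocus` are hypothetical names, and the mesh-network exchange is not shown.

```swift
import Foundation

// Hypothetical observation: a device's position in a local east/north plane (metres)
// and its compass heading in degrees clockwise from north.
struct CameraObservation {
    let east: Double
    let north: Double
    let headingDegrees: Double
}

/// Least-squares estimate of the point closest to every camera's line of sight.
/// Returns nil when the sight lines are (nearly) parallel.
func estimateFocalPoint(_ observations: [CameraObservation]) -> (east: Double, north: Double)? {
    // Accumulate A = sum(I - d*d^T) and b = sum((I - d*d^T) * p), then solve A x = b.
    var a11 = 0.0, a12 = 0.0, a22 = 0.0, b1 = 0.0, b2 = 0.0
    for obs in observations {
        let theta = obs.headingDegrees * .pi / 180
        let dx = sin(theta), dy = cos(theta) // unit sight direction (east, north)
        let m11 = 1 - dx * dx, m12 = -dx * dy, m22 = 1 - dy * dy
        a11 += m11; a12 += m12; a22 += m22
        b1 += m11 * obs.east + m12 * obs.north
        b2 += m12 * obs.east + m22 * obs.north
    }
    let det = a11 * a22 - a12 * a12
    guard abs(det) > 1e-9 else { return nil }
    return (east: (a22 * b1 - a12 * b2) / det, north: (a11 * b2 - a12 * b1) / det)
}

/// Angle (0...180 degrees) between a camera's heading and the bearing to the focal point.
func offsetFromFocus(_ obs: CameraObservation, focus: (east: Double, north: Double)) -> Double {
    let bearing = atan2(focus.east - obs.east, focus.north - obs.north) * 180 / .pi
    let diff = abs((bearing - obs.headingDegrees).truncatingRemainder(dividingBy: 360))
    return diff > 180 ? 360 - diff : diff
}

let crowd = [
    CameraObservation(east: 0, north: 0, headingDegrees: 45),
    CameraObservation(east: 10, north: 0, headingDegrees: 315),
]
if let focus = estimateFocalPoint(crowd) {
    print(focus) // approximately (east: 5.0, north: 5.0)
    // Score a new upload by how directly it points at the focal point.
    let upload = CameraObservation(east: 0, north: 0, headingDegrees: 0)
    print(offsetFromFocus(upload, focus: focus)) // approximately 45.0
}
```

Sorting uploads by `offsetFromFocus` is one way the feed could favor pictures aimed most directly at the shared focal point.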
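For Audio Enhanced Proximity, each sensor narrows the estimate one step further. The sketch below is an assumption-laden illustration rather than a tested design: the GPS distance is taken as an input (Core Location’s `CLLocation.distance(from:)` could supply it), Bluetooth is treated as a device-to-device RSSI reading converted to metres with the standard log-distance path-loss model instead of beacon-based indoor positioning, and the microphone check is a Pearson correlation of two loudness envelopes. All names, thresholds, and calibration values are hypothetical.

```swift
import Foundation

enum Proximity { case far, sameVenue, sameRoom, conversational }

/// Estimated distance in metres from a Bluetooth RSSI reading (log-distance path-loss model).
/// `measuredPower` (RSSI at 1 m) and `pathLossExponent` are hypothetical calibration values.
func bluetoothDistance(rssi: Double, measuredPower: Double = -59, pathLossExponent: Double = 2) -> Double {
    pow(10, (measuredPower - rssi) / (10 * pathLossExponent))
}

/// Pearson correlation of two equal-length loudness envelopes (1 = same sound pattern).
func audioCorrelation(_ a: [Double], _ b: [Double]) -> Double {
    precondition(a.count == b.count && a.count > 1)
    let n = Double(a.count)
    let meanA = a.reduce(0, +) / n, meanB = b.reduce(0, +) / n
    var cov = 0.0, varA = 0.0, varB = 0.0
    for (x, y) in zip(a, b) {
        cov += (x - meanA) * (y - meanB)
        varA += (x - meanA) * (x - meanA)
        varB += (y - meanB) * (y - meanB)
    }
    guard varA > 0, varB > 0 else { return 0 }
    return cov / sqrt(varA * varB)
}

/// Combine the three signals, coarsest first. All thresholds are hypothetical.
func classifyProximity(gpsMetres: Double, bluetoothMetres: Double, audioCorrelation: Double) -> Proximity {
    if gpsMetres > 100 { return .far }                 // GPS: not even the same venue
    if bluetoothMetres > 10 { return .sameVenue }      // Bluetooth: same venue, different area
    if audioCorrelation < 0.8 { return .sameRoom }     // Microphone: close, but hearing different audio
    return .conversational
}

let btMetres = bluetoothDistance(rssi: -65) // about 2 m with the default calibration
let audio = audioCorrelation([0.2, 0.5, 0.9, 0.4], [0.25, 0.55, 0.85, 0.35])
print(classifyProximity(gpsMetres: 30, bluetoothMetres: btMetres, audioCorrelation: audio)) // conversational
```

Ordering the checks from coarsest to finest means the cheaper, always-available signals can rule out most pairs before the microphone is ever needed.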
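Combining Memories reduces to a join on space and time: two photos count as the same moment if they were taken close together and within a short window. A minimal sketch, assuming each photo’s coordinates and capture date are already available (on iOS, `PHAsset` exposes `location` and `creationDate`). `PhotoMetadata`, `sharedMoments`, and the 200 m / 2 hour limits are hypothetical, and distance uses the haversine formula.

```swift
import Foundation

// Hypothetical photo metadata extracted from each person's library.
struct PhotoMetadata {
    let latitude: Double
    let longitude: Double
    let takenAt: Date
}

/// Great-circle distance in metres between where two photos were taken (haversine).
func metresBetween(_ a: PhotoMetadata, _ b: PhotoMetadata) -> Double {
    let toRadians = Double.pi / 180
    let dLat = (b.latitude - a.latitude) * toRadians
    let dLon = (b.longitude - a.longitude) * toRadians
    let h = pow(sin(dLat / 2), 2)
        + cos(a.latitude * toRadians) * cos(b.latitude * toRadians) * pow(sin(dLon / 2), 2)
    return 2 * 6_371_000 * asin(min(1, sqrt(h)))
}

/// Pairs of photos (one from each person) taken close together in both space and time.
func sharedMoments(_ mine: [PhotoMetadata], _ theirs: [PhotoMetadata],
                   maxMetres: Double = 200,
                   maxSeconds: TimeInterval = 2 * 60 * 60) -> [(PhotoMetadata, PhotoMetadata)] {
    var matches: [(PhotoMetadata, PhotoMetadata)] = []
    for a in mine {
        for b in theirs
        where abs(a.takenAt.timeIntervalSince(b.takenAt)) <= maxSeconds
            && metresBetween(a, b) <= maxMetres {
            matches.append((a, b))
        }
    }
    return matches
}

let t0 = Date(timeIntervalSince1970: 1_450_000_000)
let mine = [PhotoMetadata(latitude: 37.7749, longitude: -122.4194, takenAt: t0)]
let theirs = [
    PhotoMetadata(latitude: 37.7750, longitude: -122.4195, takenAt: t0.addingTimeInterval(30 * 60)),
    PhotoMetadata(latitude: 40.7128, longitude: -74.0060, takenAt: t0),
]
print(sharedMoments(mine, theirs).count) // 1
```

The nested loop compares every pair, which is fine for a sketch; a real library would likely bucket photos by day or region first.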
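The principle behind Microphone + Speaker noise canceling is to play the microphone signal back with its phase inverted so that the two cancel at the ear. This Swift sketch simulates that on a synthetic tone; it does not reproduce the attempt mentioned above, and `antiNoise`, `residual`, and `rms` are hypothetical names. It also shows a well-known difficulty of active noise cancellation: any delay between capture and playback shifts the anti-noise out of phase, and at a half-period delay it reinforces the noise instead of removing it.

```swift
import Foundation

/// Phase-inverted copy of the microphone signal, delayed by the processing latency.
func antiNoise(for input: [Double], latencySamples: Int) -> [Double] {
    (0..<input.count).map { i -> Double in
        let j = i - latencySamples
        return j >= 0 ? -input[j] : 0
    }
}

/// What reaches the ear: ambient noise plus the speaker's anti-noise.
func residual(_ noise: [Double], _ anti: [Double]) -> [Double] {
    zip(noise, anti).map { pair in pair.0 + pair.1 }
}

/// Root-mean-square level of a signal.
func rms(_ signal: [Double]) -> Double {
    guard !signal.isEmpty else { return 0 }
    return sqrt(signal.reduce(0.0) { $0 + $1 * $1 } / Double(signal.count))
}

// One second of a 200 Hz tone sampled at 44.1 kHz stands in for ambient noise.
let sampleRate = 44_100.0
let tone = (0..<44_100).map { sin(2 * Double.pi * 200 * Double($0) / sampleRate) }

print(rms(residual(tone, antiNoise(for: tone, latencySamples: 0))))               // 0.0
print(rms(residual(tone, antiNoise(for: tone, latencySamples: 110))) > rms(tone)) // true
```

At 200 Hz, 110 samples is roughly half a period (about 2.5 ms), so the delayed anti-noise lines up with the next half-wave and the residual ends up louder than the original tone.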
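The normalization step of the Running Pronation Calculator can be sketched in Swift. This assumes the 10-second recording has already reached the iPhone as x/y/z samples (on watchOS, Core Motion’s `CMSensorRecorder` is one candidate for capturing it). The sketch z-scores each axis and then picks out candidate foot strikes as peaks in acceleration magnitude; `AccelSample`, `normalize`, `footStrikeIndices`, and the 1.5 threshold are hypothetical, and the step that turns the resulting curves into a pronation or strike-type estimate is not shown.

```swift
import Foundation

struct AccelSample {
    let x: Double
    let y: Double
    let z: Double
}

/// Z-score each axis (zero mean, unit standard deviation) so recordings from
/// different runs and mountings are comparable. A constant axis maps to 0.
func normalize(_ samples: [AccelSample]) -> [AccelSample] {
    func zScores(_ values: [Double]) -> [Double] {
        let mean = values.reduce(0.0, +) / Double(values.count)
        let variance = values.reduce(0.0) { $0 + ($1 - mean) * ($1 - mean) } / Double(values.count)
        let sd = sqrt(variance)
        return values.map { sd > 0 ? ($0 - mean) / sd : 0 }
    }
    let xs = zScores(samples.map { $0.x })
    let ys = zScores(samples.map { $0.y })
    let zs = zScores(samples.map { $0.z })
    return (0..<samples.count).map { AccelSample(x: xs[$0], y: ys[$0], z: zs[$0]) }
}

/// Indices of local peaks in acceleration magnitude above `threshold`
/// (a hypothetical cut-off), treated here as candidate foot strikes.
func footStrikeIndices(_ samples: [AccelSample], threshold: Double = 1.5) -> [Int] {
    let magnitude = samples.map { sqrt($0.x * $0.x + $0.y * $0.y + $0.z * $0.z) }
    guard magnitude.count > 2 else { return [] }
    return (1..<(magnitude.count - 1)).filter { i in
        magnitude[i] > threshold && magnitude[i] >= magnitude[i - 1] && magnitude[i] > magnitude[i + 1]
    }
}

// Toy recording: two sharp impacts on the x axis.
let recording = [0.0, 0, 5, 0, 0, 0, 5, 0, 0, 0].map { AccelSample(x: $0, y: 0, z: 0) }
print(footStrikeIndices(normalize(recording))) // [2, 6]
```

Splitting the recording at these indices gives one acceleration curve per stride, which is the shape of input a pronation or strike-type model would then compare.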

These are a few examples I’ve come up with, but they are far from everything that is possible. Part of designing a great product is understanding your fundamental toolset, and the mobile platform gives you access to sensors far beyond those of a desktop computer. Let’s push the limits of what the mobile device can do.